PERSPECTIVE

Preserving artistic integrity while creating AI art

6 September 2023 (this article was pre-published on 19 August 2023) – Vol 1, Issue 1.

As an artist, I am looking for a process in creating AI art where I could feel comfortable signing my name over the works, calling them ‘my creations’. Integrity is crucial for the artistic process. I am definitely not looking for a so-called ‘digital muse’. But how can we attain integrity when the process by which AI creates images is not clear? Does AI use elements taken from other works/images that it was ‘trained’ on, composing a mosaic from components of other artists’ works?

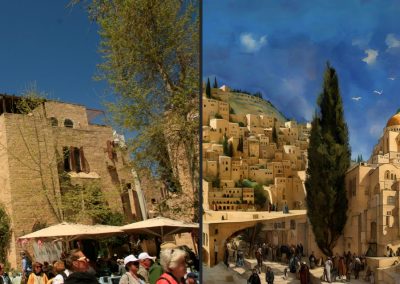

Which work is real, and which is AI? Left: ‘Gabriel’, a real-life artwork made with encaustic wax on card, by my wife, Natalie Dekel, 2011. Right: I created ‘Flame Lady 2’, AI-generated image based on ‘Gabriel’ and on prompt (text instruction) which is based on my poetry combined with one of our meditation’s transcripts. Photoshop was also used.

Luckily, AI does not collage other people’s works, rather it creates completely new art, drawing the piece from scratch, pixel by pixel. However, AI does so based on millions of other artworks it was trained on in a process that may have infringed on people’s copyrights. We need to wait to hear the results of two current lawsuits put forward against the start-up Stability AI (one lawsuit by three artists[1] and another by Getty Images.[2])

‘Remembering the Future’, 2023. This is another AI work I generated from the original art ‘Gabriel’ (see above).

As we are waiting for clear answers, I will share here a solution I have come to. This solution covers copyrights; it satisfies my need for integrity as an artist, and the need to put my creative flair into the AI works to be able to call them ‘my creations’. I will share the artworks and how I created them. I will explain the process by which AI generates images, and I will go through copyright issues. The AI tool I use is BlueWillow, a text-to-image AI generator, which is an alternative to the more popular Midjourney.

Do I own the copyrights on my AI works?

BlueWillow Terms of Service[3] indicate that on their free version “… you own the Assets you create”. This means that BlueWillow releases the copyrights to the user. As an artist, I do need to hold the copyrights over my creations.

Left: ‘Tree of Fresh Light’, real-life encaustic wax art on card, by Natalie Dekel, 2018. I have used this work, together with text from my poetry, to generate the AI image, ‘Eve, Mother of All Living’, 2023 (Right). Photoshop was also used to superimpose the dove (cloned from a second version of this AI art).

However, the terms also state that creators give BlueWillow a license to re-use the images. Likewise, the free version allows other users to see and re-use any image created: “you may use the Assets created by other users and/or customers of BlueWillow but only assets generated on public channels”.

As a solution to this, I started to pay a subscription (5$ a month) giving me a private channel which does not share my work with other users. This means that no one can see or reuse my work. BlueWillow cannot use the works either, as the Terms state “As between you and BlueWillow, you own the Assets you create, and BlueWillow will have no rights or licenses to use Assets created using our paid Services”. So, with the paid subscription the creator owns the art that they create.

Left: A photo I took in SEA LIFE London Aquarium, which I used (together with poetic text prompt) to generate the AI ‘I Am Once Again in the Pulse of My Heart’, 2023 (Right).

How does AI generate images?

So, how was AI ‘trained’ from images on the internet? And, does it operate like an art student who learns to paint, and then draws something original from scratch, using the skills they acquired? Image-generative AI tools were trained on millions (some say billions) of images online. The images were copied from the internet and saved on the training computers.

Left: original work, ‘Passion’, real-life encaustic-wax art on card, by Natalie Dekel, 2009. This work, together with text from my poetry, was used to generate the AI image, ‘Cave to the Self’, 2023 (Right).

The prompt text I used in BlueWillow in creating ‘Xzaia #5’, 2023, was a story I wrote about a figure I call Xzaia (pronounced Za-Ya). As for an image reference for BlueWillow, I have generated a few AI images based on ‘Passion’ (see above). One image was ‘Cave to the Self’ (above). There were other images created. I then chose one of the AI images and used it as a reference in BlueWillow for creating ‘Xzaia #5’. In a way, we can say that ‘Xzaia #5’ is not the child of ‘Passion’ but its grandchild… Photoshop was also used to correct facial features.

The start-up company Stability AI “…copied over five billion images from websites…” to train its system, Stable Diffusion,[4] in a process called diffusion. Diffusion means creating a series of copies of the same image, each time adding random visual noise, in steps, until we have an image with only noise visible (“diffused”). At each step, the AI model learns the relationship between the added noise and the resulting changes in the image. In other words, the AI model is recording how the added noise has changed the image. With this knowledge, the system now reverses the process, i.e. it starts with the diffused image which is full of noise.

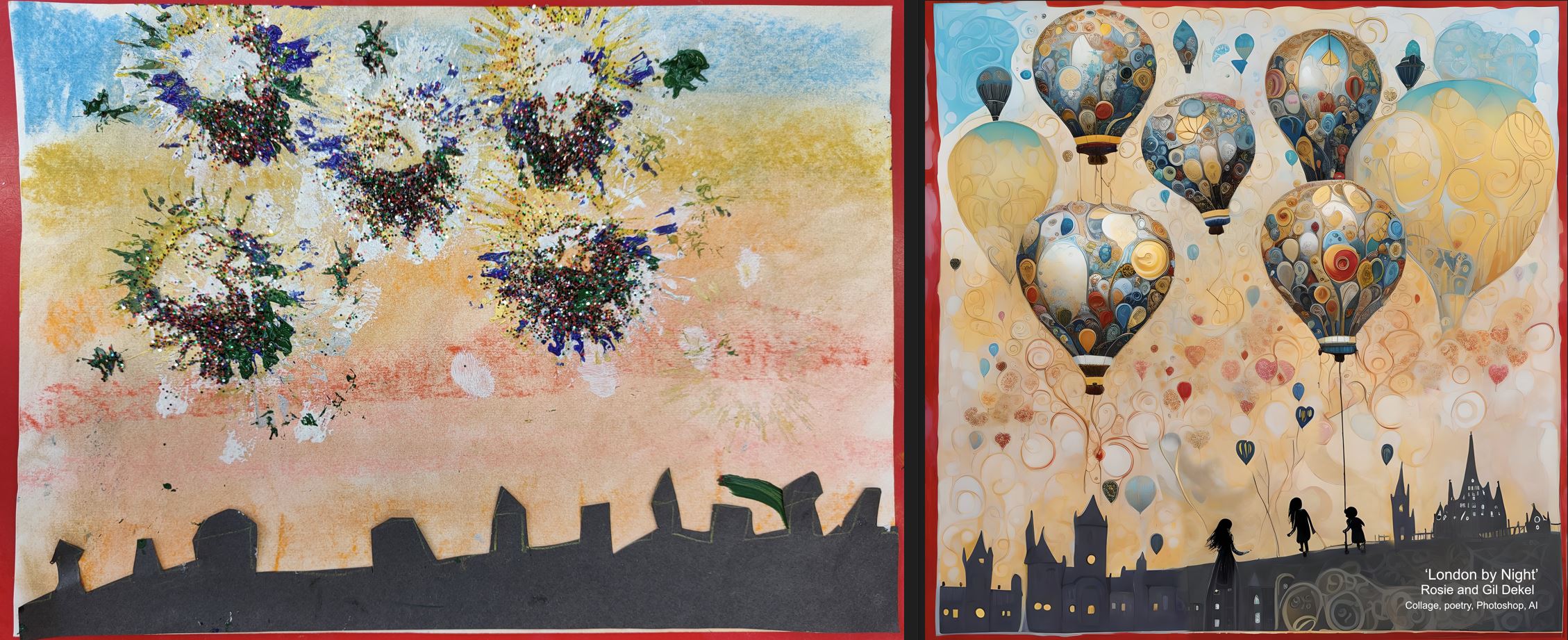

Left: Collage, chalk and paints, by 6-years old Rosie, 2023. Right: AI ‘London by night’, 2023.

The system removes the noise (denoising), in steps, refining the image, to create an image as close as possible to the image in the previous step. It continues in steps, until it creates an image that closely resembles the original image. The AI model uses its learned knowledge of noise patterns to ‘denoise’ the image – it learns the relationships between noise and image features, and it can ‘clean out’ noise by iteratively adding and then removing the noise. AI does not reveal the original image, but rather it draws it anew, pixel by pixel. The process is not perfect but reaches good results. This training process was repeated on millions (or billions) of images. The system also learned other relationships, such as the relations in shapes, sizes, colours and distances between the iris and the pupil of a human eye.

Left: ‘Grounding’, 2013, by Natalie Dekel. Right: ‘Two Halves Moon’, AI, Photoshop, poetry, 2023.

In 2022 researchers developed a process called conditioning, allowing to add text instruction (prompt) to the denoising process. This enabled the system to retrieve images based on their tags. For example, if researchers typed a prompt with the words ‘house and a tree’ the system could now retrieve images that were labelled with house and tree (showing house and tree), adding house and tree to the denoising process, and redrawing them. This is the technology that enables us to type prompts into BlueWillow and Midjourney.

AI and Photoshop ‘Jerusalem City of Gold #6 Third Temple’, 2023, based on ‘Grounding’ (above).

Another development was a method for compressing images. This allowed training the system on smaller images, rather than using the original images that are larger in size. With smaller size images, the system could train faster.

So, the main point is that AI draws images from scratch, and yet it is not clear what is the copyright status, and the intellectual property rights over the images used originally to ‘train’ it. BlueWillows states they will “remove any Assets that appear to infringe on the intellectual property rights of others…” but they do not say if their own process infringes on the intellectual property rights of others…

Left: ‘Rafael’, encaustic wax, by Natalie Dekel, 2019. Right: ‘Jerusalem #3’, AI, Photoshop, 2023.

Using my own work to generate AI arts.

My current solution is to train AI on original real-life works that I and my wife have been creating. Not just our visual works but also our texts – scripts from our guided meditation videos, and from my poetry. This text becomes the prompt I use (the instructions that tell BlueWillow what to draw).

Here is an example of the process:

To generate the prompt, for the artwork below, I started out by reading scripts from our guided meditations. I copied some inspiring segments of texts from a few scripts and then asked ChatGPT to combine them, and describe them as one visual image. ChatGPT generated quite a long story, fitting more to a script of a short animation film with a few scenes. I had to edit and cut the text down, so that it is more descriptive visually, and depicts a single image, not a series of scenes.

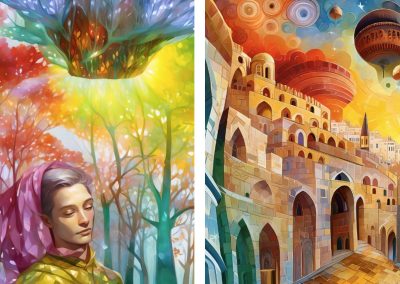

Left: ‘Forest of Love’ (detail), encaustic wax on card, by Natalie Dekel, 2010. Right: ‘And She Was Telling the Secrets of the Forest’, AI, Photoshop, 2023.

The other reason to shorten the text was that BlueWillow has a word count limit of 70 words, at the moment. My prompt, below, has more than 70 words, so BlueWillow probably ignored the excess of words, but I am sharing it here as I have used it:

A forest, bright gold sunlight filtered through the thick canopy of emerald leaves, stood a figure, dressed in modern-day cloth and looks like a modern person. The figure exuded an aura of cosmic energy that [sic], a radiant gem glowed with an otherworldly brilliance, pulsating. From this mystical gem, threads of shimmering light extended outward, like cosmic tendrils reaching out to embrace the vastness of the cosmos. Each thread was a different hue, representing the infinite facets of existence: the crimson of passion, the azure of wisdom, the opaline glow of creativity, and the emerald of nature’s harmony. As these threads of light swirled and danced, they intertwined with the surrounding flora and the figure’s head in luminescence. Beyond the figure, the realm opened up into a breathtaking expanse of cosmic skies, 7 chakras, mystical. Vast skies.

I fed this prompt to BlueWillow, together with the original artwork created by Natalie. In that way, BlueWillow had two references of instructions: Natalie’s original art, and the poetic text. Both references are original works by my wife and myself.

Two further works developed from ‘Forest of Love’ (detail). Left: ‘The Fairy Dragonfly Dream’, 2023. Right: ‘Jerusalem City of Gold #33’, 2023.

It may seem that AI generates the perfect image in one go. The truth is that for each work I am sharing here, I went through dozens of iterations and images generated, most of which I consider not good artistically or anatomically. Within a timeframe of 3 weeks, I have generated over a thousand images, with most of them having some issues (mainly anatomical). In this article, I am only sharing the pinnacle… some of the best works…

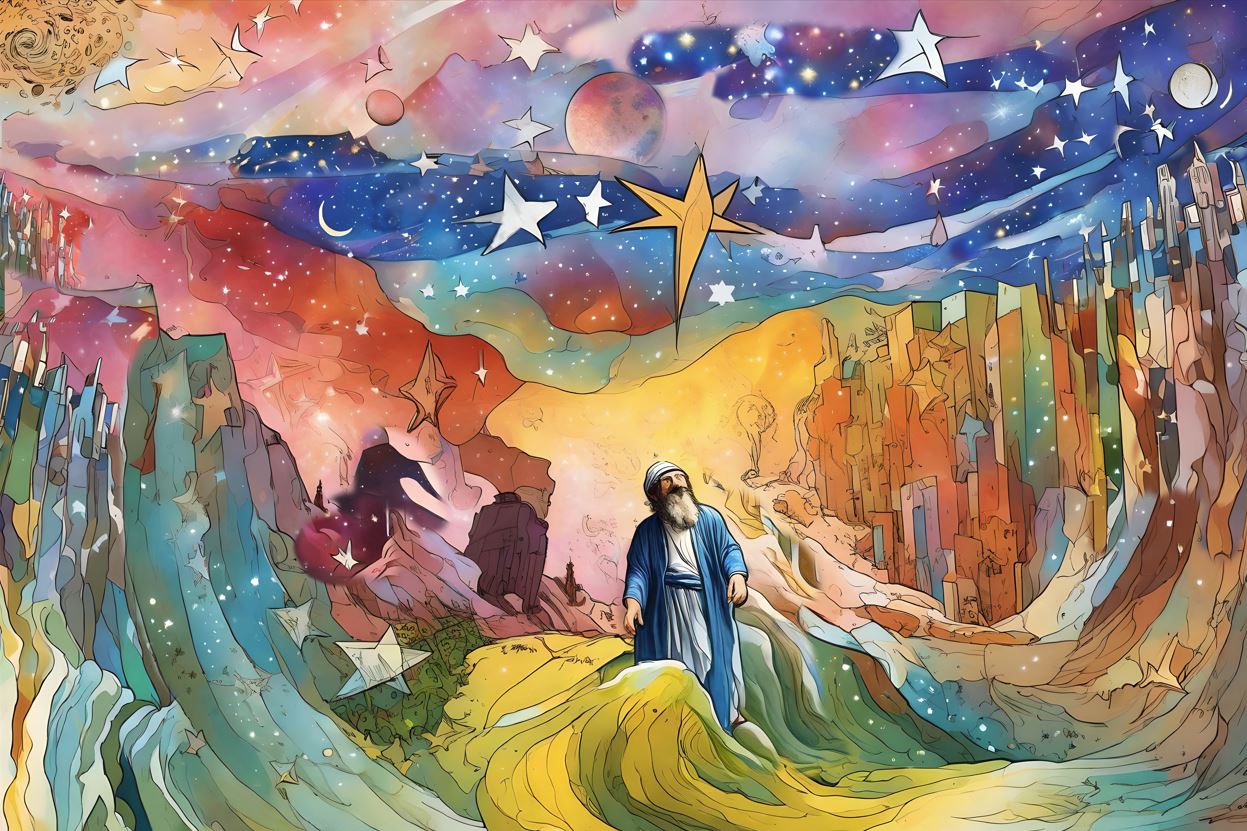

AI ‘Abraham’, 2023. Another work based on ‘Forest of Love’ (detail), poetry, biblical passages, and using Photoshop to collage a few AI versions into this final version.

In most experiments, I had to Photoshop the AI images, until I reached the final result. I had to clean visual noise, errors, and distortions of figures that were not consistent with the artistic style of the work. I acknowledge that distortion of figures is a significant art form in itself (see: Lucian Freud), yet only when it is consistent with the style of the whole composition. AI tends to struggle with hands, creating too many fingers, or hands coming from inside other hands, which is not coherent with the style of the complete work.

In some works, like ‘Abraham’ above, I combined elements from a few versions of the same work, generated by AI. For example, the sky is taken from one version, and the mountains are from another. I also used Photoshop’s clone tool to improve the inner composition, so there were some artistic decisions I made, beyond AI’s own decisions.

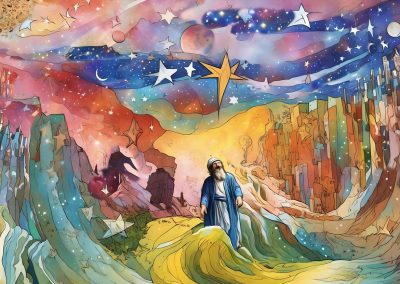

Left: ‘Bouquet from Heaven’, encaustic wax on card, by Natalie Dekel, 2009. Right: ‘Moses Splitting the Sea’, AI, Photoshop, biblical passages, poetry, 2023.

I have also found it useful to optimise images before feeding them to BlueWillow. By Photoshopping images, I created the perfect composition I was looking for, and only then I would feed that image to BlueWillow. For example, it was easier to add a figure in Photoshop, and then upload that photo as a reference to BlueWillow, rather than trying to prompt BlueWillow to generate a figure in a certain size, style, and location on the image. So, I did some preparatory work on images before the AI work commenced. This may seem quite the opposite process to what AI stands for. AI stands for prompting text, which then produces images, and not working with Photoshop on images, to then feed into AI…

I estimate that the process of producing each work took one hour, considering I already have a vast catalogue of own arts/images to ‘train’ AI, and own visionary texts to use as prompts. It would take longer than an hour if I had to write the text from scratch and create images to feed AI.

Left: ‘For Mother’ (detail), encaustic wax on card, by Natalie Dekel, 2010. I created a few AI versions from this art. I then used one of the versions to train AI to create the image on the right: ‘The Chakras Dream’, AI, meditation text as prompt, and Photoshop, 2023.

As the AI works are based on my wife’s original artworks, and the prompt texts on our original guided meditations and poetry, I am satisfied that the amount of original contribution we put into the AI works is sufficient to call them ‘ours’. When describing the media of the AI image, I would list the original real-life work, the poetry or meditation transcripts as prompt, AI, and Photoshop.

Another way to describe is to simply say ‘Mixed media (traditional and digital)’. However, while AI can be seen as ‘digital’, the word ‘digital’ does not clearly indicate the use of AI, so such a description may be misleading. It is important that we clearly state if there was a use of AI. Perhaps in the near future, AI will become so common that people would associate it with the word ‘digital’.

Here is another example of the process, with the text prompt I have used:

Left: ‘Bronze Flowers’, encaustic wax on card, by Natalie Dekel, 2009. Right: ‘Adam and Eve’, AI, biblical passages, poetry, meditation transcript, Photoshop, 2023.

I have been browsing through many works my wife has created, trying to find the perfect one to train AI for an image that will depict Adam and Eve. I chose ‘Bronze Flowers’, as the shiny bronze/gold colours looked mythical to me. The abstract shape in the centre of the work is surrounded by colours (light and darkness). To my imagination, this composition seemed fitting to a couple I envisioned in the centre, engulfed with gold, light and shadows.

Thus, the first step was to decide which original work should be used to train AI. This is a process that the artist needs to go through. AI cannot do that job for you.

The subject I chose was Adam and Eve, so I started the prompt text by typing “At the centre of the garden of Eden, the birth of Adam and Eve.” I then asked Google Bard to pull out any text about the Earth from my poems (since Adam was born from the Earth). There are a few poems about the Earth, yet Google Bard did not do a great job of collating them. I had to scrap Bard’s response on this occasion.

Natalie’s original work was created as a message about giving and receiving love in all its forms, so I decided to portray Adam and Even as a couple intertwined by their hearts. I fed BlueWillow the original art and the following text, which had some minor typo errors, as I was typing too quickly. After the AI image was created, I corrected those minor typos, and the corrected version looks like so:

At the centre of the Garden of Eden, the birth of Adam and Eve. Abstract, visionary. They emerge from the Earth, holding hands. Looking at each other. 1 centimetre away from each other. From their heart glowing gold light, touching. Where they touch a gold flower emerges. Above them, a celestial radiating light, threads of different colours. The Earth a radiant, surrounded by an aura of life and magic, adorned with flowing greenery and vibrant flowers. Sun from the bottom right of the image. Light and gold swirling around the image. The air is scented with the fragrance of blooming flowers and the soft whispers of ancient wisdom. –ar 3:2

‘Inner Glow Garden’, AI, photoshop, poetry, 2013. Another work based on ‘Bronze Flowers’.

What can humans do that AI could never do?

Dr. Catherine Breslin has suggested[5] that AI images can replace artists in creating art to illustrate or fill a gap in blog posts or newsletters. In these cases, people do not necessarily care too much about the images since they just illustrate a post. Artists, on the other hand, are driven by a need to say something about the world with their art. This is something that AI cannot do. AI does not have a stance or opinion. It is not trying to say something with the art that it creates. Rather, it is just trying to put something together that matches what it has seen in its training data, and which matches the prompt.

Indeed, AI does not have a message to share. It does not have emotions and no life experience. It lacks a soul.

Left: photo from art session with nature, 2019. Right: three versions of AI images, for the series ‘Jerusalem of Gold’, 2023. I intend to merge all three images into a single image at one point.

AI opens new opportunities for writers.

While ChatGPT is capable of writing texts and may be said to take over writers’ jobs, I think that the opposite will happen. AI prompts are based on words. Image-generated AI requires specific, well-written text descriptions. Writers can capitalise on this opportunity, as clients will now need to learn how to write better; how to compose relevant and artistic prompts to reach a more desired result.

It is true that ChatGPT can generate text for prompts for Midjourney/BlueWillow, so users do not even need to write the prompts themselves. ChatGPT 4 has an option to add plugs, designed to optimise it for writing prompts for Midjourney. However, ChatGPT has a certain writing style, and it generates repetitive texts. A million users will end up generating quite the same prompts.

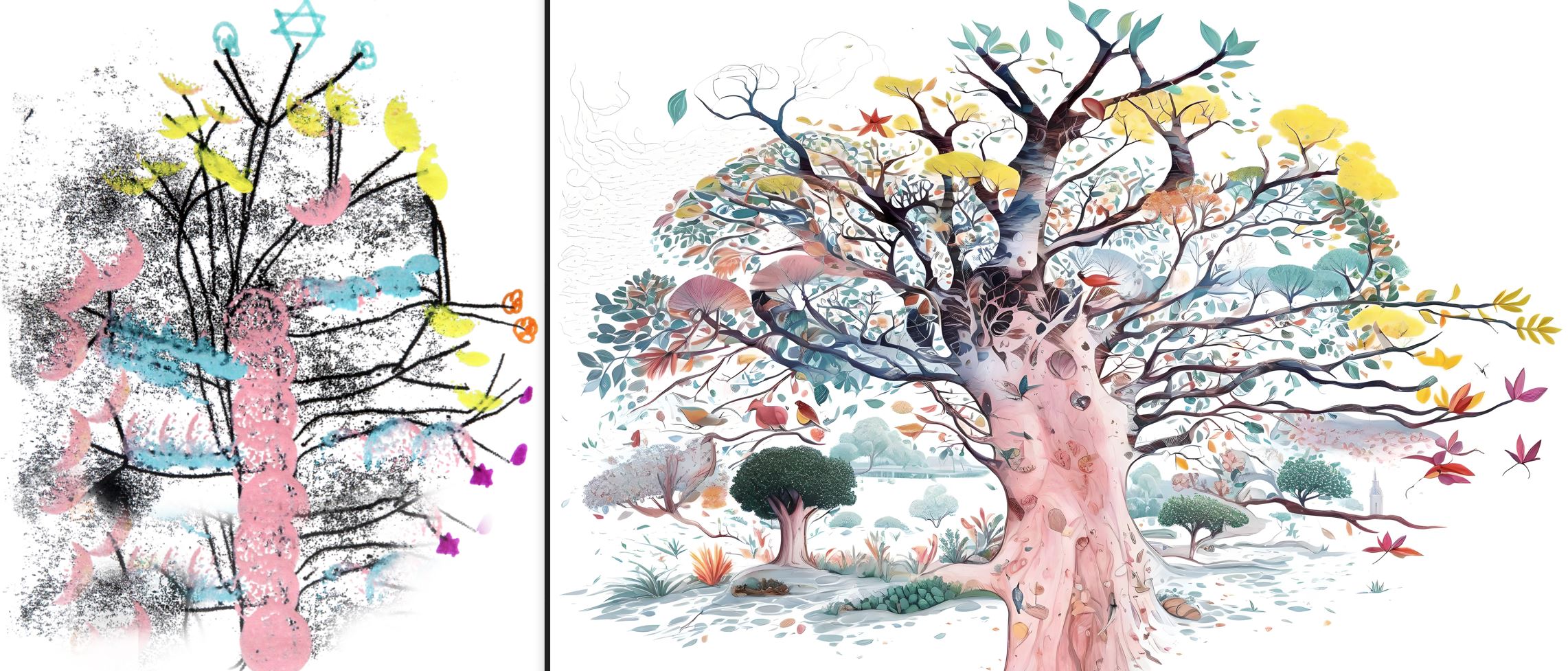

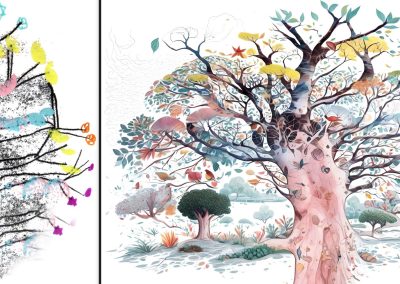

‘The Pink Tree’. Left: acrylics on paper, by Nicole Dekel, 2022. Right: AI, with the same title, 2023.

There is a growing need for excellence in prompt writing, so to be able to differentiate AI works. Moreso, I believe that creating images with AI is easy and cheap. The market will respond to this and will soon start to consider images as cheap products. The innovation will become the prompt – the text used to create the images. In other words, the real creative value will not be in the AI image itself, but in the text prompt, in the magnificent transformation of text into an image. After all, God created the world with words…

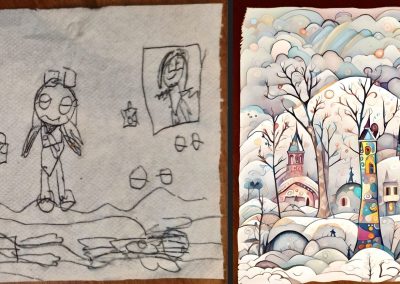

Left: Pen drawing on tissue paper, by 10-years old, Nicole, 2022. Right: ‘The Tower on the Edge of the Clouds’, AI, 2013. Apart of the composition and flowing lines (in the mountain, sky) there is quite a leap from the original to the AI work. I recall the prompt I used since it was a single word: ‘Colourful’… With not much instruction (just a single word) and with the original art as a reference, AI decided on the subject (nature, castles) by itself.

The market will soon require more writers that can produce text based on something unique, on something specific to the users/client, to achieve unique prompts. Maybe writers could also start teaching how to be creative; how to connect to the inner muse (not the digital muse), so to dwell on personal experience to produce unique writing. In this way, AI (text-to-text and text-to-image) is going to increase the need for quality human writers.[6]

It seems that AI brings flourishing opportunities to the profession of writing, as much as it brings innovation, albeit controversial, to the visual world.

Left: Photo of Jerusalem, by Gil Dekel, 2019. Right: ‘Jerusalem City of Gold #2’, AI, Photoshop, 2023. I made extensive use of Photoshop to collage a few versions of AI images into this final version.

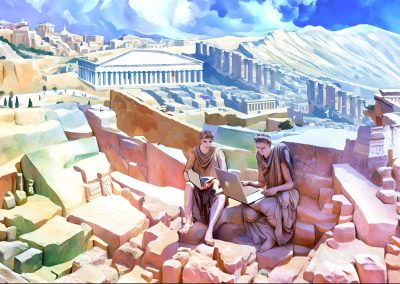

Left: photo of historical museum, by Gil Dekel, 2023. Right: ‘Atlantis’, AI, 2023.

Left: photo of 3D craft in a shoe box, by 10-years old Nicole, 2023. Right: ‘Magic Fairy Land’, AI, 2023.

Left: ‘Karmel Fairy’, collage, pencil, acrylics, by Natalie Dekel, 2020. Right: ‘Xzaia 1’, AI, Photoshop, 2023. Elaborate text, based on a story I wrote, was used as the prompt.

References and Notes

[1] BlueWillow (2023) Terms of Service. Accessed 8 August 2023, from: https://www.bluewillow.ai/terms-of-service

[2] Butterick, Matthew. (2023). ‘We’ve filed a lawsuit challenging Stable Diffusion, a 21st-century collage tool that violates the rights of artists’. Accessed 12 August 2023, from: https://stablediffusionlitigation.com/

[3] Samuelson, Pamela. (2023). ‘Generative AI meets copyright’. Science, Vol 381, No. 6654. 13 July. Accessed 18 August 2028, from: https://www.science.org/doi/full/10.1126/science.adi0656

[4] Class-action complaint. Page 13 [15], note 57. Accessed 12 August 2023, from: https://stablediffusionlitigation.com/pdf/00201/1-1-stable-diffusion-complaint.pdf

[5] The Independent (2023). ‘How much of a threat does AI really pose?’ [Webinar]. 17 August.

[6] I recently had a dream that illustrates this. I saw a modern futuristic city, with skyscrapers in the shape of yellow pencils… A pencil-skyscrapers modern city. The analogy is that the future may be technological, but will still require traditional writing skills. As a side joke, I tried to generate an AI image to visualise this dream (using prompt text only) but could not reach a good result.

Doctor Gil Dekel holds a PhD in Art, Design and Media, from The University of Portsmouth. His speciality is in processes of creativity and inspiration in artmaking. Dr. Dekel is Associate Lecturer at the Open University, Reiki Master/Teacher, and a visionary artist. He co-wrote ‘The Energy Book’.

Click to view large images in gallery:

Gil Dekel holding a print of AI art 2023. Photo: Natalie Dekel.

Last updated 26 Sep 2023. Video embedded 9 May 2024.

A very interesting article – and absolutely beautiful artworks (both the originals and the AI-generated). It is very impressive to see how innovators such as yourself have worked with this emerging technology and are already developing your own techniques and styles to such great effect, considering how new this technology is.

As you say, the ability to write creatively and to convey mood, style, emotion, uniqueness, story etc is clearly fundamental to the final artwork. But it is also very interesting to note how many iterations you had to discard before you were satisfied with your finished artwork (after further photoshopping etc). So quality human interventions are still very much key!

The issue of copyright will be a fascinating subject to follow and your discussion of how you attribute your works is very helpful. Thank you!

You have a very talented family! I enjoyed the article. It was thought-provoking.

Did it take you long to toy with BlueWillow to get the style you wanted. Also did you experiment with a more granular/textured style, or did BlueWillow want to ‘denoise’ most of the time because of how it was trained?

I also think your ideas about creativity and new opportunities are kind and optimistic. Perhaps the AI art generators bear some comparison to the invention of photography, which people said would be the death of art, and it was/wasn’t – in many respects, because it put a whole profession of average/kitsch decorative painters out of business in the first half of the twentieth century. But on the other hand, out of the other end came the more dedicated artists, and artists permeating into other industries (like fashion and marketing), and so art and creativity carry on.

Thanks.

BlueWillow was trained on our works, so it copied our artistic style. It is still not easy to get an exact style only by the use of text alone (prompts).

So exciting and inspiring, is there a platform for learning AI art skills?

Basically, you wish to learn art skills, and then separately learn how AI tools work (plenty of online videos/guides.)