What is language, and how do human constraints shape it?

5 July 2024 – Vol 2, Issue 3.

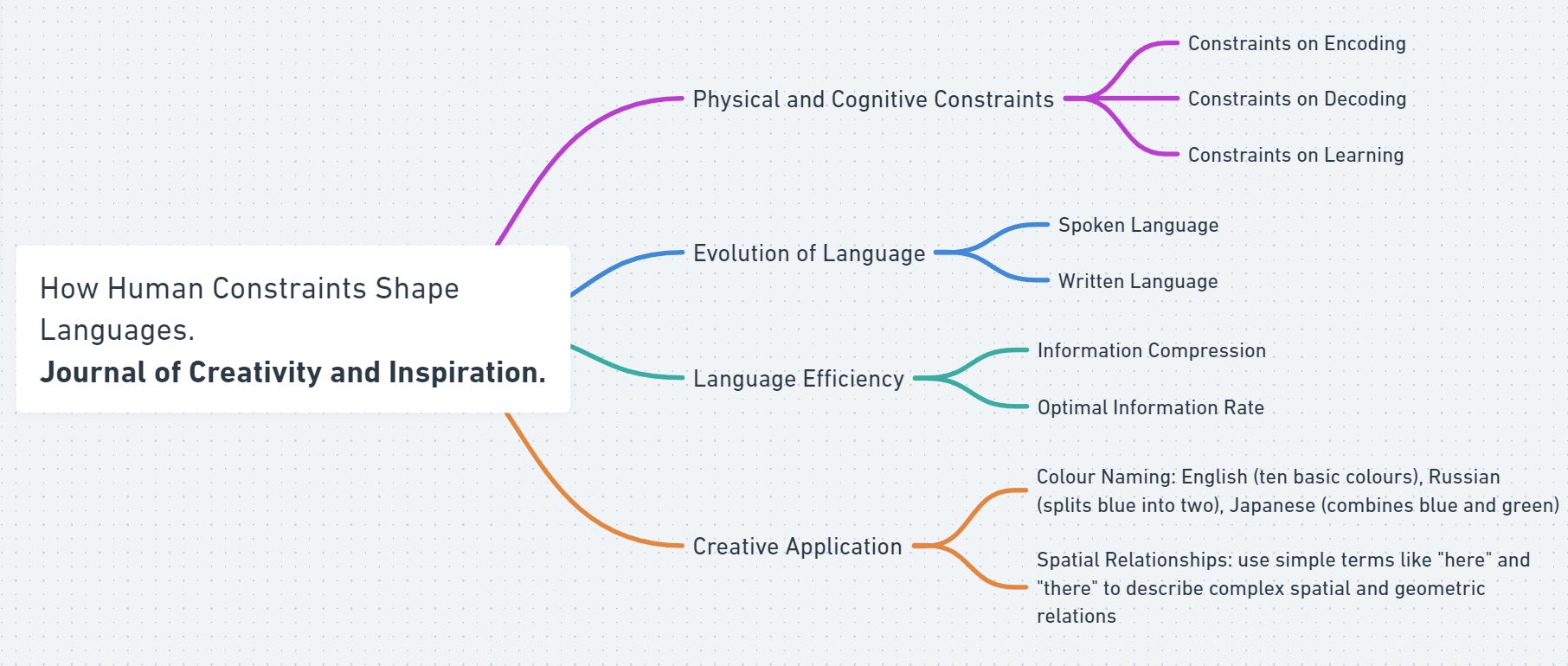

Language is an example of human creativity, evolving from our ability to adapt and innovate within constraints. This article outlines how the limitations of our physical and cognitive capacities have shaped diverse and efficient systems of languages.

In his seminal book on how visual perception works in the brain, David Marr left us with a powerful framework for understanding complex adaptive systems, from vision to bird flight to language. As he had it:

“Trying to understand perception by studying only neurons is like trying to understand bird flight by studying only feathers: it just cannot be done. In order to understand bird flight, we have to understand aerodynamics; only then do the structure of feathers and the different shapes of birds’ wings make sense.” (Marr, 1982: 27)

Figure 1. ‘Languages’, by AI / Gil Dekel.

The point is this: to understand something like bird flight or vision or language, a purely mechanical approach is not going to work. You are just not going to figure out how wings work by looking only at feathers, although you certainly should look at them. You have to consider the function of the system as a whole and the constraints under which it operates. The various shapes of bird wings are solutions to the problem of flying under constraints of aerodynamics, power usage, weight, flexibility, and so on.

So, from this perspective: what is language? How can we understand language the way we would understand bird flight as a whole, rather than feathers alone? What is language’s function, and what are the constraints that shape it?

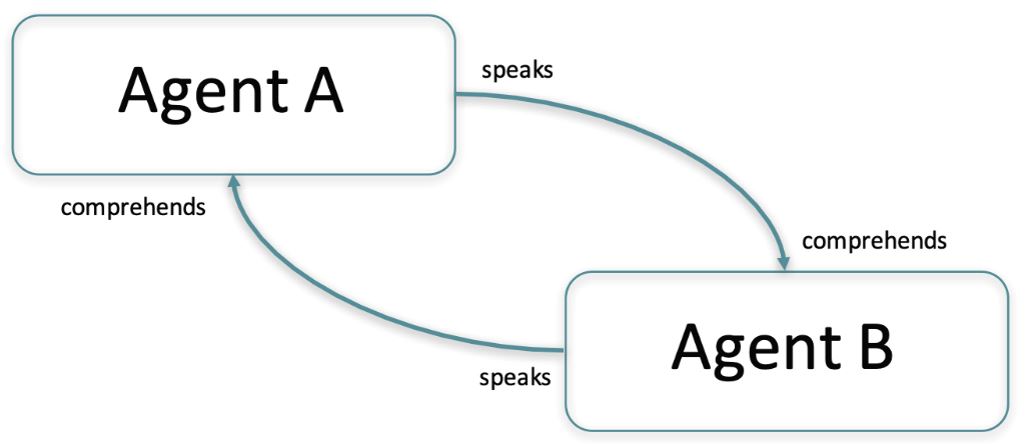

Language is a code that enables the kind of communication illustrated in Figure 2 below: an agent, A (say, me) encodes its thoughts into an utterance and speaks it. Another agent, B, receives the utterance and decodes the underlying thought as best it can. Agent B can use the same code to communicate back to Agent A. The whole process has to operate under three sources of constraint, which are tied up in our physical existence as humans. First, it has to be possible for a human brain to encode thoughts into utterances. Second, it has to be possible for a human to decode thoughts from utterances. And third, the code itself has to be learned from sparse, limited data during childhood: children learn language from limited and incomplete information; they don’t hear every possible sentence or word combination but still manage to understand and use language effectively.

Figure 2. Agent A speaks (encodes thoughts into an utterance of language), and Agent B comprehends that speech (decodes the utterance into thoughts). The speaking and comprehending steps are both points where constraints on language live.

These constraints – encoding, decoding, and learning from sparse input – are all the more severe when we consider how language evolved. For the vast majority of the history of Homo sapiens, up until perhaps around 3300 BCE, there was no writing, nor even the idea of writing. All language was spoken language, meaning that the encoding and decoding steps had to happen in real time in real environments. Yet, far from hamstringing human language, these stringent constraints have actually bred creativity: in this case, the flowering of diverse and elaborate languages from Sumerian to Zulu, each with its own trade-offs and ingenious solutions to the problem of communicating complex thoughts.

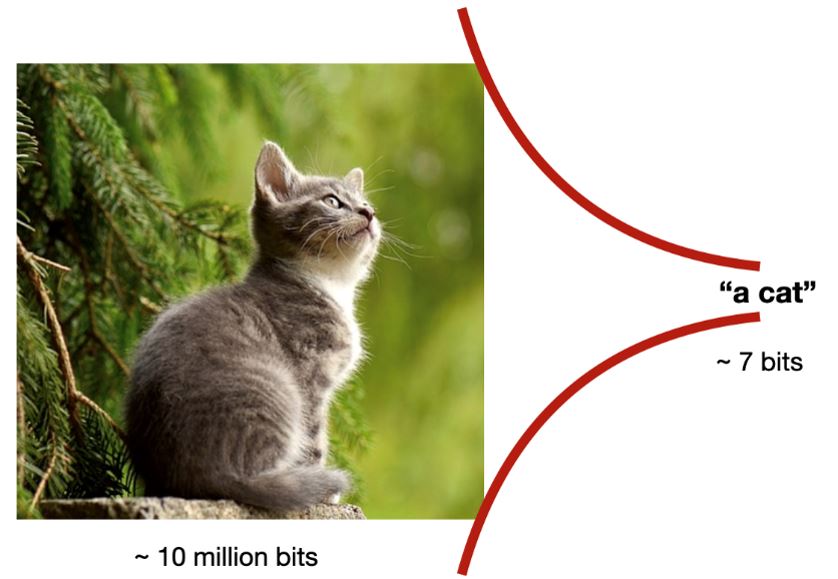

Just to give a sense of the level of constraint that language is under, consider how you would describe the picture in Figure 3. You might say it’s “a cat”, translating your visual percept of the image into a short utterance. Now the image itself, which you’re describing, contains about 10 million bits of information (reckoning at 4 bits per pixel, near the limit of what humans can perceive). The utterance “a cat” in English contains about 7 bits of information. When you say “a cat”, you are compressing the image down to 7 bits, nearly nothing compared to the starting point of 10 million bits. And this is typical for language: its actual information content is incredibly paltry. Regardless of what language they are speaking, people speak at a roughly constant information rate of around 40 bits per second (Coupé et al., 2019). They spit out syllables faster in Japanese and slower in English, keeping the rate of information transfer constant across languages; this is apparently a human constraint on how fast we can encode meanings into movements of our vocal tract. At that rate, it would take about three days of talking, without pause or sleep, to convey the full 10 million bits of information in the image, if you ever wanted to do such a thing.

Figure 3. Language as extreme lossy compression. The image contains about 10 million bits of information, and the utterance that describes it – ‘a cat’ – contains about 7 bits.
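The arithmetic behind these figures is easy to check. The short Python sketch below simply takes the article's round numbers as given (10 million bits for the image, 4 bits per pixel, 7 bits for “a cat”, 40 bits per second for speech); it measures nothing itself, and the function names are made up for illustration.

```python
# Back-of-the-envelope figures taken from the text; none are measured here.
IMAGE_BITS = 10_000_000   # approximate information in the image
BITS_PER_PIXEL = 4        # the text's per-pixel estimate
UTTERANCE_BITS = 7        # approximate information in "a cat"
SPEECH_RATE_BPS = 40      # approximate speech rate, in bits per second


def implied_pixels(image_bits: float, bits_per_pixel: float) -> float:
    """Number of pixels implied by the image size and per-pixel estimate."""
    return image_bits / bits_per_pixel


def compression_ratio(source_bits: float, message_bits: float) -> float:
    """How many source bits stand behind each bit of the message."""
    return source_bits / message_bits


def speaking_time_seconds(bits: float, rate_bps: float) -> float:
    """Time needed to speak `bits` of information at a constant rate."""
    return bits / rate_bps


pixels = implied_pixels(IMAGE_BITS, BITS_PER_PIXEL)
ratio = compression_ratio(IMAGE_BITS, UTTERANCE_BITS)
seconds = speaking_time_seconds(IMAGE_BITS, SPEECH_RATE_BPS)
cat_seconds = speaking_time_seconds(UTTERANCE_BITS, SPEECH_RATE_BPS)

print(f"implied image size: {pixels:,.0f} pixels")
print(f"compression ratio: about {ratio:,.0f} to 1")
print(f"time to speak the image: {seconds:,.0f} s = {seconds / 86_400:.1f} days")
print(f"time to say 'a cat': {cat_seconds:.3f} s")
```

On these assumptions the image corresponds to 2.5 million pixels, the utterance keeps roughly one bit in every 1.4 million, and speaking the whole image would take 250,000 seconds of uninterrupted talk.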

And yet, despite being constrained to contain almost no information, language consistently contains the important information. When we encode the image into 7 bits of language, the compression is of course highly lossy – the original information is significantly reduced, leading to some loss of detail and precision. No one will be able to reproduce the exact image given the utterance, or indeed any real image from any natural language utterance. But they will get the most important and relevant information needed for understanding and taking appropriate action. We are able to somehow find the most important 7 bits among the 10 million, at a rate of 40 bits per second. Language is severely constrained, but it operates nearly optimally under those constraints.

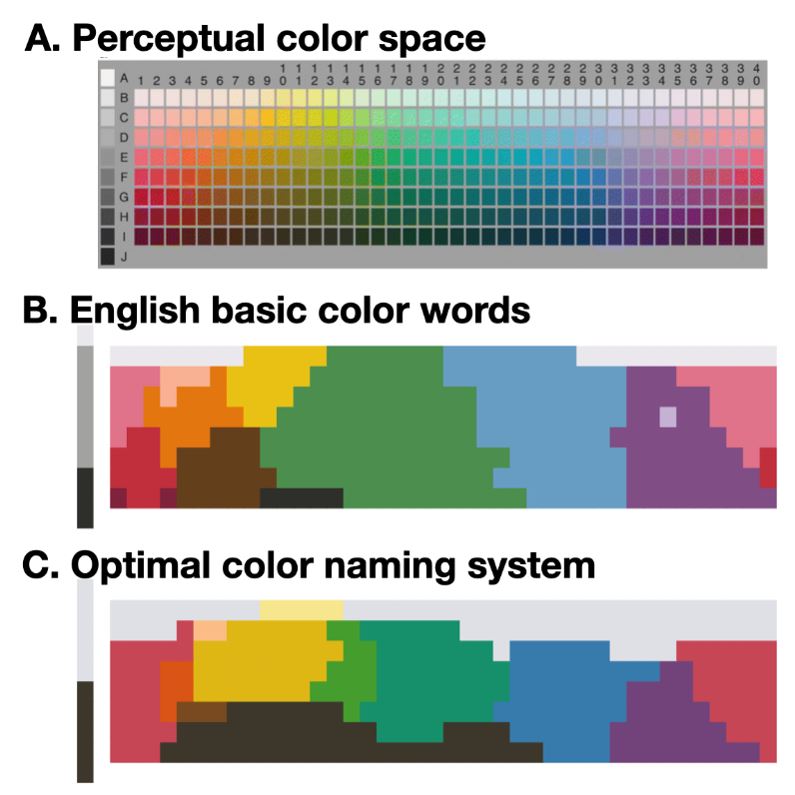

Consider the words we use for colours. The actual percept of colour is three-dimensional, defined by hue, saturation, and value. A two-dimensional reduction is shown in the grid in Figure 4A (only the colours with the highest saturation given their hue and value). Describing the percept losslessly would require (at least) three real numbers. And yet human languages describe colour using a small set of basic colour words. For example, English has eleven: white, black, red, orange, yellow, green, blue, purple, pink, brown, and grey. (Note that a basic colour word is a word that cannot be considered a shade of any other. For example, mauve is not a basic colour word because most people would say that mauve is a shade of purple. But green is a basic colour word, because no one would say that green is a shade of blue or yellow or anything else.) Other languages have more or fewer: for example, Russian splits English blue into two words – goluboy (the colour of the sky) and sinii (the colour of a sapphire), and many languages such as Japanese combine blue and green into the same category, which linguists call grue. The languages with the fewest words for colour have only two (warm and cool) or three (white, black, and red; what we would call blue would be called a shade of black). These languages are often spoken by tribal groups in non-industrialized environments, where there are very few paints and pigments that can imbue objects with arbitrary colours.

Figure 4. A. The Munsell colour space, a representation of all colour percepts at maximum saturation. B. The English colour naming system as a partition of the Munsell colour space. C. Optimal colour names given the constraint of conveying around 3 bits of information about meaning per word. Panels B and C are reproduced from Zaslavsky et al. (2018).

Why do we have the colour words we do—not only in English, but also in Russian, Japanese, and Dani? The English colour system conveys about 3 bits of information per word—again, nearly nothing in the scheme of things. But it allocates those 3 bits optimally, as the following analysis from Zaslavsky et al. (2018) shows. We can use information theory to derive a boundedly optimal assignment of colour percepts to words—one which conveys colour percepts as accurately as possible given human perception, while expressing only (say) 3 bits of information per word. Figure 4B shows how English partitions the continuous colour space into basic colour words, based on a survey by Berlin and Kay (1969), and Figure 4C shows the information-theoretically derived optimal colour naming partitions, given the constraint that the words contain only 3 bits of information about their meanings.
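One way to get a feel for a figure like 3 bits per word: a word drawn from an inventory of n words can carry at most log2(n) bits, and it reaches that ceiling only if all the words are used equally often. The Python sketch below computes this ceiling for a few inventory sizes, plus the Shannon entropy of one skewed usage pattern. It is an upper bound for orientation only, not the measure computed by Zaslavsky et al. (2018), and the usage frequencies are invented for illustration.

```python
import math


def max_bits_per_word(vocabulary_size: int) -> float:
    """Upper bound on information per word: log2 of the number of words."""
    return math.log2(vocabulary_size)


def entropy_bits(probabilities: list[float]) -> float:
    """Shannon entropy, in bits, of a word-usage distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Ceiling on bits per word for colour vocabularies of different sizes.
for size in (2, 3, 8, 10, 12):
    print(f"{size:>2} colour words: at most {max_bits_per_word(size):.2f} bits per word")

# Hypothetical, made-up usage frequencies for a four-word system.
skewed_usage = [0.5, 0.25, 0.125, 0.125]
print(f"4 words used equally often: {max_bits_per_word(4):.2f} bits per word")
print(f"4 words with skewed usage:  {entropy_bits(skewed_usage):.2f} bits per word")
```

A two-word system tops out at 1 bit per word, a three-word system at about 1.6 bits, and an inventory of around ten words at a little over 3 bits; uneven usage only lowers these numbers.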

We see a remarkable alignment between English and the optimal solution. What’s more, if we ask for a boundedly optimal system with a tad more information, we get the Russian system (splitting the blues), and if we ask for a tad less, we get the Japanese system (lumping together blue and green). If we ask for a boundedly optimal system with much less information (say, a little more than 1 bit), we get the white/black/red systems that are widely attested in tribal societies. The diversity of human colour naming systems is reproduced simply by tracing out the systems that communicate about colour most effectively subject to different constraints on the amount of information. This difference between languages presumably goes back to how important colour is in the different cultures and environments of these languages’ speakers.
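The trade-off between the number of bits and the accuracy of communication can be illustrated with a deliberately crude toy model. It assumes that hues are spread evenly around a 360-degree circle, that a language with k colour words cuts the circle into k equal arcs, and that a listener hearing a word guesses the middle of its arc. None of this is the analysis in Zaslavsky et al. (2018), which works with a model of human perception; the sketch only shows how each extra bit buys a more accurate guess.

```python
import math


def category_index(hue: float, k: int) -> int:
    """Index of the equal-width category (0..k-1) that contains `hue`."""
    return int(hue // (360 / k)) % k


def category_centre(index: int, k: int) -> float:
    """Hue at the middle of category `index`; this is the listener's guess."""
    return (index + 0.5) * 360 / k


def circular_distance(a: float, b: float) -> float:
    """Shortest distance between two hues on the colour circle, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def mean_error(k: int, samples: int = 3600) -> float:
    """Average guessing error over evenly spaced hues, for a k-word system."""
    total = 0.0
    for i in range(samples):
        hue = (i + 0.5) * 360 / samples           # evenly spaced sample hue
        guess = category_centre(category_index(hue, k), k)
        total += circular_distance(hue, guess)
    return total / samples


for k in (2, 3, 4, 8, 12):
    bits = math.log2(k)  # bits per word if all k words are equally likely
    print(f"{k:>2} words: {bits:.2f} bits per word, "
          f"mean error {mean_error(k):.2f} degrees")
```

In this toy world the average error is 90/k degrees, so doubling the vocabulary (one more bit per word) halves the listener's error. Real colour systems are far from equal slices of a circle, which is exactly why the optimal partitions have to be derived rather than assumed.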

We see this pattern not only in names for colours, but also in words for spatial relationships: think of all the richly complex spatial and geometric relations that are possible between objects, and how we compress these in language into here and there (Chen et al., 2023). We also see it in the way that we mark features like time on verbs; for example, English distinguishes present and past in pairs like jump and jumped (Mollica et al., 2021). Again and again, languages partition meanings into words in ways that allow maximally accurate communication given severe constraints.

As we see in art, so in nature: some of the most intricate and impressive things are in fact the results of constraints. Human language evolved recently (compared to, for example, our internal organs), and it repurposes our vocal tract for an entirely new function. It is no surprise that language is highly constrained, and that we cannot communicate information at anything like the rate of data transfer between computers. Yet it is exactly that high level of constraint that enables so much creativity.

© Journal of Creativity and Inspiration.

Images: Figure 1 – AI / Gil Dekel. Figures 2-4 – Richard Futrell.

Richard Futrell is an Associate Professor in the UC Irvine Department of Language Science where he leads the Language Processing Group. He studies language processing in humans and machines using information theory and Bayesian cognitive modeling. Richard also works on NLP and AI interpretability.

References

Berlin, B., & Kay, P. (1969). Basic Color Terms: Their Universality and Evolution. University of California Press.

Chen, S., Futrell, R., & Mahowald, K. (2023). An information-theoretic approach to the typology of spatial demonstratives. Cognition, 240, 105505.

Coupé, C., Oh, Y. M., Dediu, D., & Pellegrino, F. (2019). Different languages, similar encoding efficiency: Comparable information rates across the human communicative niche. Science Advances, 5(9), eaaw2594.

Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. W. H. Freeman. (Reissued by MIT Press, 2010.)

Mollica, F., Bacon, G., Zaslavsky, N., Xu, Y., Regier, T., & Kemp, C. (2021). The forms and meanings of grammatical markers support efficient communication. Proceedings of the National Academy of Sciences, 118(49), e2025993118.

Zaslavsky, N., Kemp, C., Regier, T., & Tishby, N. (2018). Efficient compression in color naming and its evolution. Proceedings of the National Academy of Sciences, 115(31), 7937-7942.