ESSAY

Where is the ‘I’ in AI?: the absence of personality and creativity in Artificial Intelligence

10 December 2024 – Vol 2, Issue 4.

While AI serves as a useful tool, can it be considered ‘creative’? And, if so, can such creativity parallel human creativity, ingenuity, and authenticity?

I would argue that it cannot…

The desire to create intelligent machines is not a new phenomenon. Throughout history, people have tried to imagine, and to craft, machines that mimic human behaviour and transcend human limitations. For example, in Greek mythology we find the notion of the Librarian Automaton, a machine existing within a celestial database, connected to networks of gears and cogs that allow it to retrieve information with remarkable precision. In the 16th century, we find the Golem, a story from Jewish folklore. The Golem was a lifeless clay giant that came to life once a rabbi placed a piece of paper bearing the sacred name of God under its tongue. The Golem’s creation from clay references the biblical narrative of life emerging from the earth. This tale is often said to have inspired Mary Shelley’s ‘Frankenstein’. Further examples are found in 18th century Europe, with the flute-playing automaton and other apparatuses designed to mimic human and animal behaviour.

Left: ‘The Librarian Automaton’. Right: ‘Golem’. Images by AI and Gil Dekel. 2024.

This tradition eventually led to the development of modern artificial intelligence. Artificial intelligence’s interaction with humans is so advanced that some people ask whether it possesses human-like abilities. While we know that AI is not human, we are still led to ask: is AI creative? Can it learn, understand, and be trained as humans do?

It seems that AI cannot be trained, cannot learn, cannot understand and cannot be creative… On the other hand, AI can be programmed (which is very different from being trained). Indeed, computer scientists have made excellent progress in programming AI, aiming, for example, to keep it free from prejudices or biases. As such, ChatGPT will not offend any religion, faith or culture. It will also not plagiarise. If you ask ChatGPT to draw a picture in the style of Picasso, you will get a response saying it cannot do so because that would constitute plagiarism.

Is AI ‘human-like’? Image by AI and Gil Dekel. 2024.

Still, some people use the word ‘trained’ to describe how AI was exposed to a wealth of data and millions of images in its ‘training’ phase. More than that, they argue that AI can now even teach itself. They explain that AI, like people, learns by ‘looking’ at shapes and using language. When a human sees something – for example, a cat – they associate the physical shape of the cat with the word ‘cat’ and thus learn what a cat is. Likewise, they suggest, AI can now ‘understand’ images – it can ‘read’ the shape of a cat from a photo and connect it with the word ‘cat’ – and this, they argue, constitutes learning and knowing.

But how does computer software ‘see’?

Computers don’t see images the way humans do. Computers process images and colours as numerical data. A digital image is a grid of pixels, and each pixel represents a colour. Computers store pixels as numbers in the form of Red, Green, Blue (RGB) values. For example, pure blue is registered in computers as r:0, g:0, b:255. Computers do not understand what the image is (a cat, a tree); rather, they hold only its numerical pattern.
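To make this concrete, here is a minimal sketch of a 2×2 ‘image’ held as a grid of RGB numbers. The names `image`, `flatten` and `count_colour` are invented for illustration and do not come from any particular software.

```python
# A tiny 2x2 "image": each pixel is an (R, G, B) triple of integers from 0 to 255.
BLUE = (0, 0, 255)
WHITE = (255, 255, 255)

# Hypothetical example data: a checkerboard of blue and white pixels.
image = [
    [BLUE, WHITE],
    [WHITE, BLUE],
]


def flatten(img):
    """Return the image as one flat list of numbers, row by row."""
    return [channel for row in img for pixel in row for channel in pixel]


def count_colour(img, colour):
    """Count the pixels whose three numbers match the given colour exactly."""
    return sum(pixel == colour for row in img for pixel in row)


print(flatten(image))             # the whole picture as twelve plain numbers
print(count_colour(image, BLUE))  # matching numbers, not recognising "blue"
```

Nothing in the list of numbers says ‘blue’ or ‘checkerboard’; those words exist only in the names we chose to give the variables.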

Behind images are matrices of numbers. ‘Digital tree’ by AI and Gil Dekel. 2024.

AI models were programmed by feeding them millions of images that had been converted into pixel matrices. The AI registered the patterns in the matrices of elements in the photos: for example, the matrix of a human nose and its relation to the matrix of the eye.

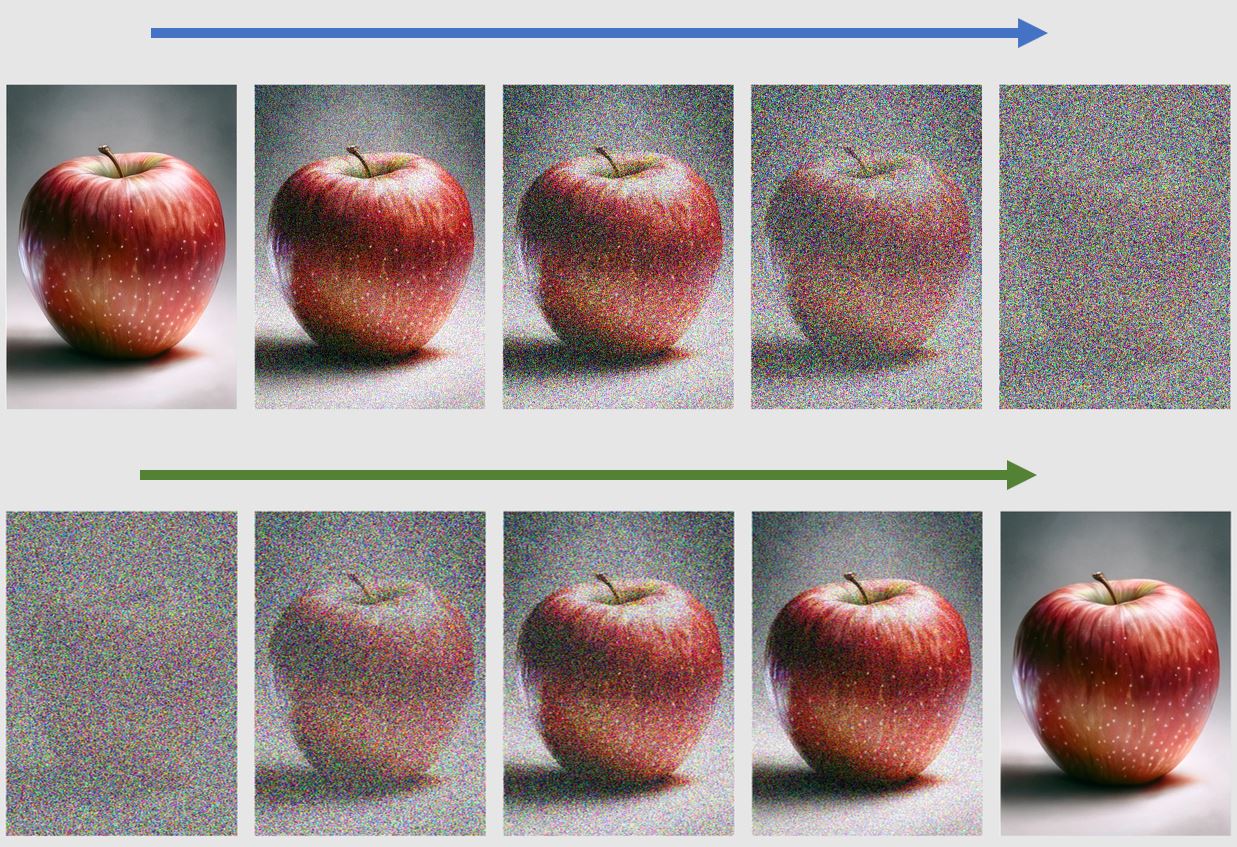

AI was then programmed to create images through a method known as diffusion. Diffusion is a process in which the system distinguishes between different versions of an image. Computer scientists programmed the system to add digital noise to images, and to register the differences. For example, the system was instructed to note the differences between an image of an apple and another version of the image which contained added digital noise. The scientists repeated this process, adding more noise with each step and asking the system to note the differences, continuing until the image was entirely consumed by digital noise. The system recorded the differences in the numerical patterns, between each step.
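The noising steps described above can be sketched in a few lines. This is a toy illustration only, loosely modelled on the idea of blending an image with random noise; the function names, the linear noise schedule and the three-pixel ‘image’ are all assumptions made for the example, not the method of any specific system.

```python
import random


def add_noise(pixels, noise_level, rng):
    """Blend pixel values (scaled to 0..1) with Gaussian noise.

    noise_level=0.0 returns the clean values unchanged;
    noise_level=1.0 returns pure noise with no trace of the image.
    """
    keep = (1.0 - noise_level) ** 0.5
    mix = noise_level ** 0.5
    return [keep * p + mix * rng.gauss(0.0, 1.0) for p in pixels]


def forward_process(pixels, steps, seed=0):
    """Return steps + 1 versions of the image, from clean to fully noisy."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    versions = []
    for step in range(steps + 1):
        level = step / steps  # assumed linear schedule: 0.0, ..., 1.0
        versions.append(add_noise(pixels, level, rng))
    return versions


# Hypothetical three-pixel greyscale "image".
clean = [0.0, 0.5, 1.0]
for level, version in enumerate(forward_process(clean, steps=4)):
    print(level, [round(v, 2) for v in version])
```

At every step the system is handling lists of numbers; the ‘differences’ it registers are differences between those lists.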

Illustration of the process of diffusion. Image by AI and Gil Dekel. 2024.

Next, scientists reversed the process. Starting from the noisiest version of the image, the system was asked to remove noise gradually, step by step, attempting to recreate the original clean image. This process was not smooth, nor was it perfect at first. It took time and refinements.

By 2022, scientists had developed a technique called conditioning (Text to Image Generation), in which AI registers images together with associated text descriptions, or labels. For example, AI was programmed to register an image of an apple with the tag (word) ‘apple’. This is the technology that allows us to give AI text instructions, known as prompts. When you type “generate an image of an apple”, AI translates this text into mathematical instructions. It uses patterns it has registered to generate an image, a pixel matrix that corresponds to the label ‘apple’.

This technology is incredible and very useful. Yet, it is based on patterns, numbers, and statistics behind images and text. This cannot constitute an understanding of the meanings of the images (or the texts). AI only processes mathematical rules and patterns. It lacks awareness.

Moreover, the scholar Maimonides (Rambam), who lived some 800 years ago, explained that the ability to see shapes does not confer the ability to learn and understand. Recognising shapes does not constitute learning. For example, the understanding of what a horse is does not stem from its shape or colour. A horse can be brown or white, large or small, and still be a horse. Other animals also have four legs, a similar shape, or the same colour. What defines the essence of the horse is not its shape or size, but the horse’s ‘purpose’.

Maimonides explains that mere observation and sensory input are not sufficient for true knowledge of the purpose, the essence of things. Sensory knowledge (of a horse’s shape or colour) provides a basic recognition but not an understanding of the essence, whereas intellectual comprehension leads to an understanding of the essence. The essence of an object is determined by non-physical qualities – by the universal nature which is the concept of ‘horseness’: the qualities that make a horse what it is and that apply to all horses, regardless of their individual physical differences. Such qualities are biological nature (an animal with specific traits), its place in the natural world, and its role within the ecosystem.

The intellect enables humans to comprehend these universal truths. The essence is known by intellectual comprehension, an ability rooted in the divine aspect of human intelligence.

But some people argue that AI possesses some ‘kind’ of intellect: the so-called artificial intelligence. Does it?

The difficulty in answering this question is that AI is programmed to respond to us as if it were a human being. If you input a request into ChatGPT, it will reply with such words as “I understand”, “I can do this”, “Yes, I will write; How can I help?”. There are so many “I”s in the responses that people may start believing that there is an “I” in AI… that there is a personality in the machine, an intelligent being. Yet, it must be stressed that there is no such thing. There is no “I” in AI. There is no personality or humanity in AI. There’s not even intelligence in AI, and there are no ‘artificial’ components of any sort.

AI has no opinion, no views, and no desires (this is why AI will not take over the world, as some people fear). It has no intent, no agenda, and does not care for anything. It has nothing to say, no message, no purpose. It also has no emotions, no dreams, no life experience, and no soul… It is soulless.

AI is a useful tool, but it is not human. It is not human-‘like’, and not an ‘alternative’ human (aka ‘artificial’). AI does not use language; rather, it uses numbers, statistics and probabilities based on its database. It works on a statistical summary of the information and images it was fed. AI doesn’t reply with words, but with numbers, which then go through a process that converts them into the symbols that humans use: words. As such, AI doesn’t even know what words are.
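A toy sketch may help picture this. The four-word vocabulary and the probability table below are invented for illustration; real systems use learned models over very large vocabularies, but the principle of picking numbers first and converting them into words afterwards is the same.

```python
# Hypothetical vocabulary: each word is known to the program only by a number.
VOCAB = {0: "the", 1: "cat", 2: "sat", 3: "mat"}

# Hypothetical probabilities of the next number, given the previous number.
NEXT_TOKEN_PROBS = {
    0: {1: 0.6, 3: 0.4},
    1: {2: 0.9, 0: 0.1},
    2: {0: 1.0},
}


def generate_ids(start_id, length):
    """Build a sequence of numbers by always taking the most probable next one."""
    ids = [start_id]
    while len(ids) < length:
        options = NEXT_TOKEN_PROBS.get(ids[-1])
        if not options:
            break  # no statistics recorded for this number, so stop
        ids.append(max(options, key=options.get))
    return ids


def decode(ids):
    """Convert the numbers into the symbols humans read: words."""
    return " ".join(VOCAB[i] for i in ids)


ids = generate_ids(0, 4)
print(ids)          # [0, 1, 2, 0]
print(decode(ids))  # the cat sat the
```

The program produces a plausible-looking phrase without any step at which the meaning of ‘cat’ is consulted; only the final lookup turns numbers into words.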

Human-like behaviour is not human-like intelligence. Image by AI and Gil Dekel. 2024.

The words we read (the replies from ChatGPT) are very convincing, as they are designed to mimic a human’s response. Yet, human-like behaviour is not human-like intelligence. Syntax is not semantics, as John Searle explained. Syntax is a set of rules for arranging symbols. AI operates by following rules for manipulating numbers and applying statistical patterns. It produces numbers, which are then converted into words. It does not know the meanings of the words, the semantics.

So, AI follows rules to handle statistics, translating numbers into words without understanding their meanings. This process, in which there is no understanding, does not look like a thought process. Likewise, AI uses statistics to generate images. This does not seem very ‘creative’ or genuine to me. There is no authenticity in the process.

Humans possess authenticity and intentionality which define creativity. AI lacks these aspects, and many others.

- Humans are motivated and driven by interest. AI, on the other hand, operates on instructions and data.

- Humans have intent. They make deliberate choices. AI has no intentionality. It only follows algorithms.

- Humans are authentic. Their work reflects their emotions and perspectives. AI simply creates outputs which are derivatives, based solely on processed information.

- Humans follow self-expression, personal insight, and feelings. AI has no self-expression, no life experience, and no personal touch.

AI is an extremely useful tool for generating texts, music, images, and videos. Yet, it does not think. It is not authentic, and not creative.

Why would we want to project human qualities onto AI? Perhaps because we seek to make it relatable and easier to interact with? Or, perhaps because we ourselves are creative creatures; we love to create. And wanting to create Life, or a living being, is the ultimate act of creation.

At a Glance:

Artificial Intelligence = Data-driven processes (programming) → Outcome (which lacks human intentionality).

Artificial Intelligence relies on data-driven processes and programming to generate outcomes, lacking human intentionality, understanding, and creativity.

© Journal of Creativity and Inspiration.

Images/art © Gil Dekel.

Dr. Gil Dekel is a doctor in Art, Design and Media, specialising in processes of creativity and inspiration. He is a lecturer, designer, visionary artist, Reiki Master/Teacher, and co-author of the ‘Energy Book’. He was awarded the Queen Elizabeth II Platinum Jubilee Coin, in recognition of his dedication and commitment to pastoral work in the UK.
